LLaMA2 Chat

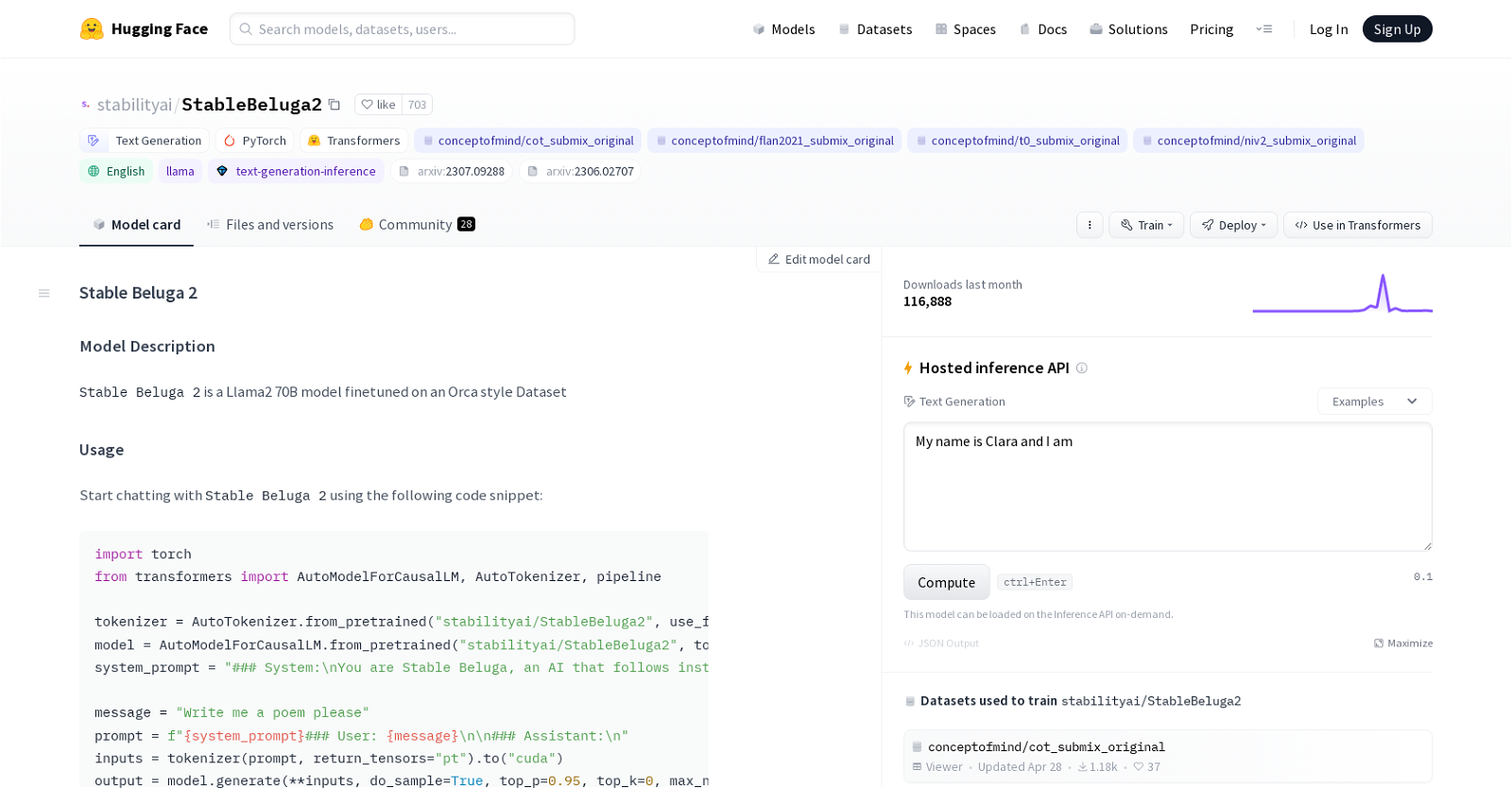

LLaMA2 Chatbot is a freely available, open-source conversational AI that serves as an alternative to ChatGPT. It is powered by the LLaMA2 model and is designed to hold human-like conversations.

Its distinguishing feature is its user settings, which give fine-grained control over the bot’s responses. Users can adjust the temperature, top-P, and maximum sequence length to customize the chatbot’s behavior. Higher temperature settings produce more random output, while lower ones keep the output more predictable.
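To make the effect of temperature concrete, here is a minimal sketch of temperature scaling applied to a softmax over token scores. It is an illustration under assumptions, not the chatbot's actual code: the function name `softmax_with_temperature` and the example logits are hypothetical.

```python
import math

def softmax_with_temperature(logits, temperature=1.0):
    """Turn raw token scores into probabilities, scaled by temperature.

    Hypothetical helper for illustration; not taken from the chatbot's source.
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    # Dividing by the temperature sharpens (T < 1) or flattens (T > 1) the scores.
    scaled = [x / temperature for x in logits]
    # Subtract the maximum before exponentiating for numerical stability.
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Made-up scores for three candidate tokens.
logits = [2.0, 1.0, 0.1]
sharp = softmax_with_temperature(logits, temperature=0.5)
flat = softmax_with_temperature(logits, temperature=2.0)
```

With the lower temperature, the top-scoring token receives a larger share of the probability mass (`sharp[0] > flat[0]`), so sampling is more predictable; with the higher temperature the distribution is flatter and sampling is more random.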

The top-P parameter controls response diversity by restricting sampling to the most probable tokens whose cumulative probability reaches P, and the maximum sequence length caps the length of the sequence, measured in tokens rather than words. With these user-facing controls, LLaMA2 Chatbot offers a more personalized chat experience.
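As a rough illustration of top-P (nucleus) filtering, the sketch below keeps the smallest set of highest-probability tokens whose cumulative probability reaches the threshold and renormalizes them. The function name `top_p_filter` and the example probabilities are assumptions made for this example, not part of the chatbot's code.

```python
def top_p_filter(probs, top_p=0.9):
    """Keep the most probable tokens until their cumulative probability
    reaches top_p, then renormalize. Returns {token_index: probability}.

    Hypothetical helper for illustration only.
    """
    if not 0 < top_p <= 1:
        raise ValueError("top_p must be in (0, 1]")
    # Rank token indices from most to least probable.
    ranked = sorted(enumerate(probs), key=lambda pair: pair[1], reverse=True)
    kept = []
    cumulative = 0.0
    for index, p in ranked:
        kept.append((index, p))
        cumulative += p
        if cumulative >= top_p:
            break
    # Renormalize so the kept probabilities sum to 1.
    total = sum(p for _, p in kept)
    return {index: p / total for index, p in kept}

# Made-up distribution over four candidate tokens.
probs = [0.5, 0.3, 0.15, 0.05]
narrow = top_p_filter(probs, top_p=0.7)
wide = top_p_filter(probs, top_p=1.0)
```

A smaller top-P leaves fewer candidate tokens (here `narrow` keeps only the two most probable), which reduces diversity; a top-P of 1.0 leaves every token available.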

Moreover, because the project is open-source, developers around the world can contribute to it, driving continuous improvement in conversational AI.