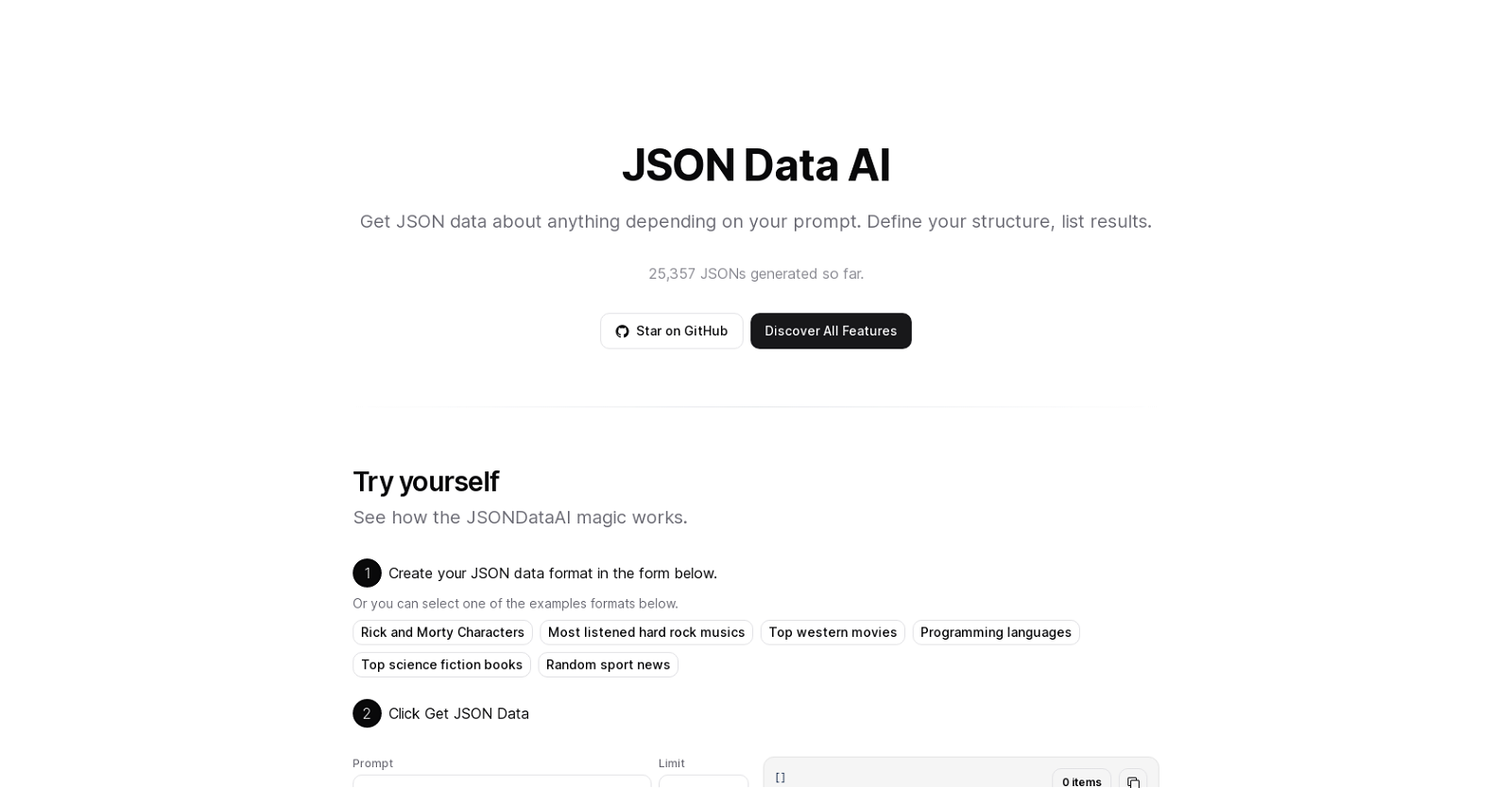

JSON Data

The JSON Data AI tool generates JSON data from user prompts. Users define the structure of the JSON data and list the results they want, which gives them control over the output. The structure can be built through a form, or users can start from one of the provided example formats, such as Rick and Morty characters, most-listened hard rock music, top western movies, programming languages, and top science fiction books, among others.

To generate JSON data, users enter a prompt and configure parameters such as limit, name, type, string, and description. The tool has already generated a substantial number of JSON responses, and generated data can be browsed and accessed afterwards. The tool also supports nested objects, allowing for more complex JSON responses.
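As a rough illustration of how such a structure definition might look in code, the Python sketch below models fields with a name, type, and description, including one nested object, and checks a sample record against them. Everything here is an assumption made for illustration: `FieldSpec`, `matches`, the type names, and the shape of the `request` dictionary are hypothetical and are not the tool's actual form fields or API.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class FieldSpec:
    """One field of a hypothetical structure definition."""
    name: str
    type: str  # assumed type names: "string", "number", "boolean", "object"
    description: str = ""
    children: list["FieldSpec"] = field(default_factory=list)  # only for "object"


def matches(record: Any, fields: list[FieldSpec]) -> bool:
    """Return True if record contains every field with a value of the declared type."""
    if not isinstance(record, dict):
        return False
    for spec in fields:
        if spec.name not in record:
            return False
        value = record[spec.name]
        if spec.type == "object":
            ok = matches(value, spec.children)  # recurse into nested objects
        elif spec.type == "string":
            ok = isinstance(value, str)
        elif spec.type == "boolean":
            ok = isinstance(value, bool)
        elif spec.type == "number":
            # bool is a subclass of int in Python, so exclude it explicitly
            ok = isinstance(value, (int, float)) and not isinstance(value, bool)
        else:
            ok = False
        if not ok:
            return False
    return True


# Hypothetical structure for the "Rick and Morty characters" example format.
character_fields = [
    FieldSpec("name", "string", "Character's full name"),
    FieldSpec("age", "number", "Approximate age in years"),
    FieldSpec("origin", "object", "Where the character comes from", children=[
        FieldSpec("planet", "string", "Home planet"),
        FieldSpec("dimension", "string", "Dimension identifier"),
    ]),
]

# Hypothetical request: a prompt, a limit on the number of results, and the fields.
request = {"prompt": "Rick and Morty characters", "limit": 5, "fields": character_fields}

sample_record = {
    "name": "Rick Sanchez",
    "age": 70,
    "origin": {"planet": "Earth", "dimension": "C-137"},
}
is_valid = matches(sample_record, character_fields)
```

A check like `matches` is one way to confirm that a generated record follows the requested structure before using it elsewhere.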

For users who need more features and better performance, the pro version of the tool offers a more accurate and faster AI model, the ability to save and edit responses, fetching responses through a REST API, pagination for realistic use cases, and the removal of rate limits for unlimited generation and retrieval of responses. These features suit users with more advanced needs and larger-scale projects.
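To make the pagination idea concrete, the Python sketch below splits a list of generated records into pages, the way a paginated endpoint typically would. It is a local illustration only: the function name `paginate` and the response keys (`page`, `page_size`, `total_items`, `total_pages`, `items`) are assumptions, since the pro version's actual REST response format is not described here.

```python
import math
from typing import Any


def paginate(items: list[Any], page: int, page_size: int) -> dict[str, Any]:
    """Return one 1-indexed page of items plus basic paging metadata."""
    if page < 1 or page_size < 1:
        raise ValueError("page and page_size must be positive integers")
    total_pages = max(1, math.ceil(len(items) / page_size))
    start = (page - 1) * page_size
    return {
        "page": page,
        "page_size": page_size,
        "total_items": len(items),
        "total_pages": total_pages,
        "items": items[start:start + page_size],  # empty if page is past the end
    }


# 25 placeholder records standing in for a saved response.
records = [{"id": i} for i in range(25)]
last_page = paginate(records, page=3, page_size=10)
```

With 25 records and a page size of 10, the third page holds the remaining five records, which mirrors how a front end would consume a realistic, partially filled last page.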

The JSON Data AI tool is powered by ChatGPT and the Vercel AI SDK. Users can follow the tool's creator on Twitter for the latest updates and can support the project by buying them a coffee. Interested users can also book a call for further assistance with the tool.