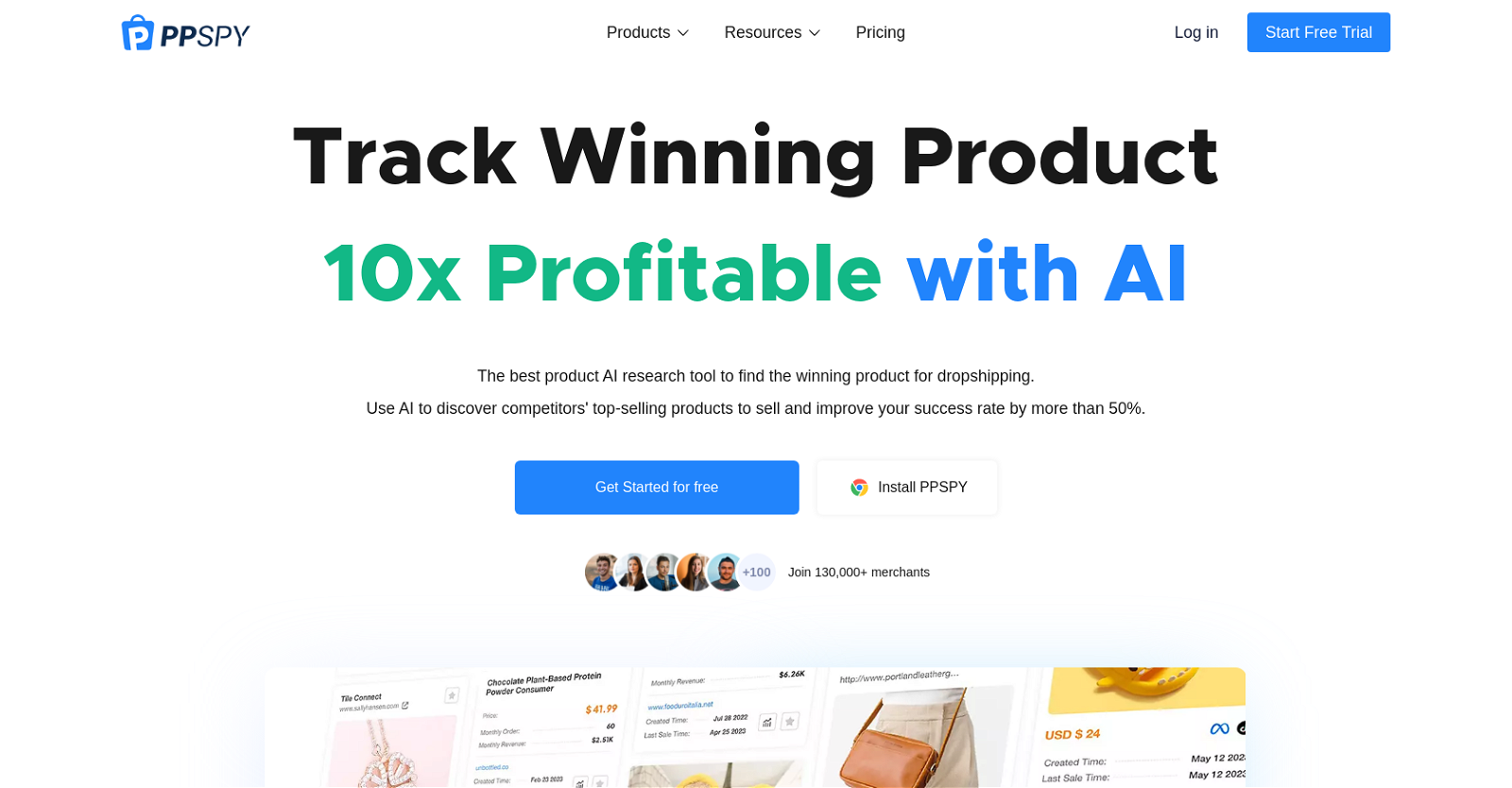

PPSPY is an advanced AI tool designed to provide comprehensive and efficient surveillance solutions for personal and professional use. With its cutting-edge technology and user-friendly interface, PPSPY offers a wide range of features to ensure enhanced monitoring and security.

Key Features:

1. Real-time Monitoring: PPSPY enables users to monitor various activities in real-time, including phone calls, text messages, social media interactions, and GPS location. This feature allows for immediate response and intervention when necessary.
2. Remote Access: With PPSPY, users can remotely access the target device and gather information without physical contact. This feature proves particularly useful for parents, employers, and individuals concerned about the well-being and safety of their loved ones.
3. Multimedia Tracking: The tool allows users to track multimedia files such as photos, videos, and audio recordings stored on the target device. This feature aids in identifying potential threats or inappropriate content.
4. App Usage Monitoring: PPSPY provides insights into the applications used on the target device, allowing users to detect any unauthorized or suspicious activities. This feature is especially valuable for employers who want to ensure productivity and prevent data breaches.
5. Geofencing: By setting virtual boundaries, PPSPY enables users to receive alerts whenever the target device enters or exits specific locations. This feature proves beneficial for parents monitoring their children’s whereabouts or employers tracking company-owned devices.

Benefits:

1. Enhanced Security: PPSPY offers a reliable and efficient solution for safeguarding personal and professional interests. It helps prevent potential risks, such as cyberbullying, unauthorized access, or data leakage.
2. Peace of Mind: With PPSPY, users can have peace of mind knowing they have a powerful tool to monitor and protect their loved ones or business assets. The tool provides valuable insights and alerts, ensuring timely intervention when needed.
3. User-Friendly Interface: PPSPY boasts an intuitive and easy-to-navigate interface, making it accessible to users of all technical backgrounds. The tool’s simplicity ensures a seamless experience while maximizing its functionality.
4. Compatibility: PPSPY is compatible with various operating systems, including Android and iOS, making it accessible to a wide range of users. It supports multiple devices, ensuring flexibility and convenience.
5. Privacy and Data Security: PPSPY prioritizes user privacy and data security. All information gathered through the tool is encrypted and protected, ensuring confidentiality and peace of mind.

In summary, PPSPY is an AI tool that offers surveillance solutions for personal and professional use. Its real-time monitoring, remote access, and multimedia tracking features are aimed at enhanced security and peace of mind, and its user-friendly interface, Android and iOS compatibility, and focus on privacy and data security are positioned for individuals and organizations seeking monitoring solutions.