local.ai

The Local AI Playground is a native app designed to simplify experimenting with AI models locally. It lets users run AI experiments without any technical setup and without a dedicated GPU. The tool is free and open source. Thanks to its Rust backend, the local.ai app is memory-efficient and compact, with a footprint under 10MB on Mac M2, Windows, and Linux. It performs inference on the CPU and adapts to the number of available threads, so it suits a wide range of hardware. It also supports GGML quantization at q4, q5.1, q8, and f16 precision.
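To make the quantization options more concrete, here is a simplified Python sketch of 4-bit block quantization in the spirit of GGML's q4 format. Weights are split into blocks of 32 that share one scale factor, and each weight is stored as a small signed integer. This is an illustration under assumptions, not GGML's actual bit layout: the function names, the symmetric [-8, 7] range, and the 16-bit scale used in the size estimate are choices made for this example.

```python
from typing import List, Tuple

BLOCK_SIZE = 32  # weights per block; assumed to match GGML's q4 block size


def quantize_block_q4(block: List[float]) -> Tuple[float, List[int]]:
    """Map one block of floats to signed 4-bit integers in [-8, 7] plus a shared scale."""
    amax = max((abs(x) for x in block), default=0.0)
    # Choose the scale so the largest magnitude maps to 7; avoid dividing by zero.
    scale = amax / 7.0 if amax > 0 else 1.0
    q = [max(-8, min(7, round(x / scale))) for x in block]
    return scale, q


def dequantize_block_q4(scale: float, q: List[int]) -> List[float]:
    """Recover approximate float weights from one quantized block."""
    return [scale * v for v in q]


def quantize(weights: List[float]) -> List[Tuple[float, List[int]]]:
    """Split a flat weight list into blocks and quantize each block independently."""
    return [
        quantize_block_q4(weights[i:i + BLOCK_SIZE])
        for i in range(0, len(weights), BLOCK_SIZE)
    ]


def bits_per_weight(bits: int, block_size: int = BLOCK_SIZE, scale_bits: int = 16) -> float:
    """Storage cost per weight: the packed values plus one scale amortized over the block."""
    return (bits * block_size + scale_bits) / block_size


if __name__ == "__main__":
    weights = [i / 10 for i in range(-32, 32)]
    blocks = quantize(weights)
    restored = [w for scale, q in blocks for w in dequantize_block_q4(scale, q)]
    print(max(abs(a - b) for a, b in zip(weights, restored)))  # worst-case rounding error
    print(bits_per_weight(4), bits_per_weight(8))  # 4.5 8.5
```

The size helper shows why low-bit formats shrink models so much: under this sketch's layout, each weight costs 4.5 bits at q4 instead of 16 bits at f16.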

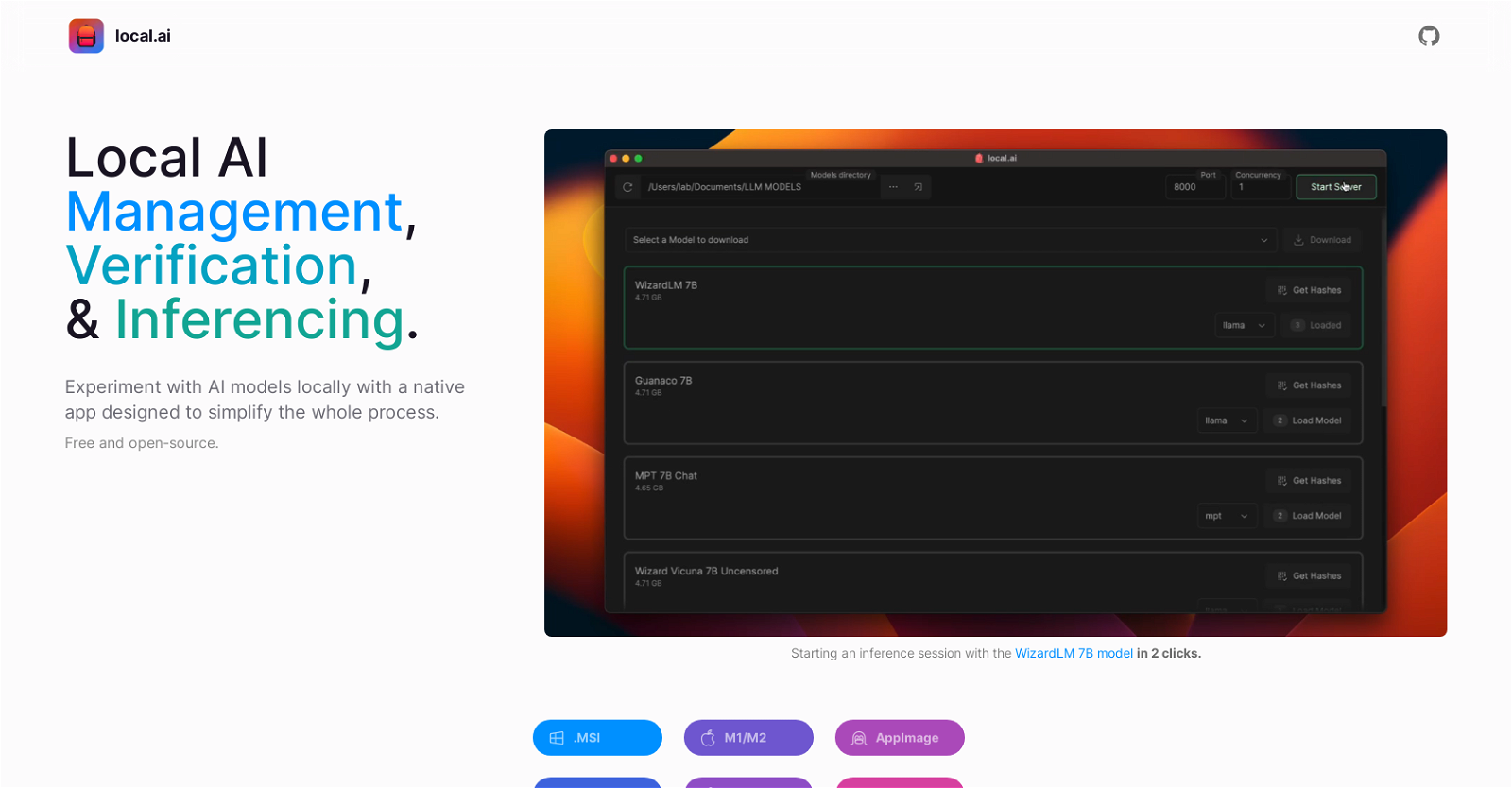

Local AI Playground also handles model management, keeping track of all of a user's AI models in one place. It supports resumable, concurrent model downloads and usage-based sorting, and it works regardless of how the model directory is structured.
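Resumable downloading is commonly built on HTTP range requests. The Python sketch below shows the general idea, assuming the model host honours the `Range` header; it is not local.ai's downloader, and `resume_headers`, `download_resumable`, and `download_all` are hypothetical names. The size of a partial file becomes the starting offset, a `206 Partial Content` reply is appended to that file, and a thread pool runs several downloads at once.

```python
import os
import urllib.error
import urllib.request
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, Iterable, Tuple


def resume_headers(path: str) -> Dict[str, str]:
    """Build a Range header that asks only for the bytes not yet on disk."""
    have = os.path.getsize(path) if os.path.exists(path) else 0
    return {"Range": f"bytes={have}-"} if have > 0 else {}


def download_resumable(url: str, path: str, chunk_size: int = 1 << 20) -> None:
    """Download url to path, continuing from any partial file already present."""
    req = urllib.request.Request(url, headers=resume_headers(path))
    try:
        resp = urllib.request.urlopen(req)
    except urllib.error.HTTPError as err:
        if err.code == 416:  # Range Not Satisfiable: local file already covers the resource
            return
        raise
    with resp:
        # 206 Partial Content: the server honoured the Range header, so append.
        # 200 OK: the server sent the whole file, so overwrite from the start.
        mode = "ab" if resp.status == 206 else "wb"
        with open(path, mode) as out:
            while chunk := resp.read(chunk_size):
                out.write(chunk)


def download_all(jobs: Iterable[Tuple[str, str]], max_workers: int = 4) -> None:
    """Run several (url, path) downloads concurrently on a thread pool."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = [pool.submit(download_resumable, url, path) for url, path in jobs]
        for future in futures:
            future.result()  # surface any download error to the caller
```

For example, `download_all([(url_a, "a.bin"), (url_b, "b.bin")])` fetches two models in parallel, and re-running it after an interruption picks up where each file left off, provided the server supports range requests.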

To ensure the integrity of downloaded models, the tool verifies digests using the BLAKE3 and SHA-256 algorithms. This includes digest computation, a known-good model API, license and usage chips, and a quick check using BLAKE3.
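Digest verification amounts to hashing the downloaded file in chunks and comparing the result with a trusted value. Here is a minimal Python sketch, assuming the expected digest is already known (for example, from a list of known-good models). SHA-256 comes from the standard library, while BLAKE3 requires the third-party `blake3` package. `file_digest` and `verify` are hypothetical helper names, not part of local.ai.

```python
import hashlib

try:
    import blake3  # third-party: pip install blake3
except ImportError:
    blake3 = None


def file_digest(path: str, algorithm: str = "sha256", chunk_size: int = 1 << 20) -> str:
    """Hash a file in fixed-size chunks so large models never sit fully in memory."""
    if algorithm == "sha256":
        hasher = hashlib.sha256()
    elif algorithm == "blake3":
        if blake3 is None:
            raise RuntimeError("BLAKE3 needs the third-party 'blake3' package")
        hasher = blake3.blake3()
    else:
        raise ValueError(f"unsupported algorithm: {algorithm}")
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            hasher.update(chunk)
    return hasher.hexdigest()


def verify(path: str, expected_hex: str, algorithm: str = "sha256") -> bool:
    """Return True if the file's digest matches the trusted hex digest."""
    return file_digest(path, algorithm) == expected_hex.strip().lower()
```

BLAKE3 is typically much faster than SHA-256 in software, which makes it a natural fit for a quick check, while SHA-256 digests are what many model hosts publish.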

The tool also includes an inference server: users can start a local streaming server for AI inference in just two clicks. It provides a quick inference UI, supports writing to .mdx files, and includes options for inference parameters and remote vocabulary.
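A streaming inference server sends tokens to the client as they are generated instead of waiting for the full completion. The self-contained Python sketch below illustrates that pattern with server-sent events. The `/completions` path, the `prompt`/`max_tokens` request fields, and the echoing `fake_generate` stand-in for a model are all assumptions for illustration, not local.ai's documented API.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer
from typing import Iterator, List


def fake_generate(prompt: str, max_tokens: int) -> Iterator[str]:
    """Hypothetical stand-in for a real model: echoes the prompt word by word."""
    yield from prompt.split()[:max_tokens]


class StreamingHandler(BaseHTTPRequestHandler):
    def do_POST(self) -> None:
        length = int(self.headers.get("Content-Length", 0))
        params = json.loads(self.rfile.read(length) or b"{}")  # inference parameters
        self.send_response(200)
        self.send_header("Content-Type", "text/event-stream")
        self.end_headers()
        for token in fake_generate(params.get("prompt", ""), params.get("max_tokens", 16)):
            # One server-sent event per token, flushed as soon as it exists.
            self.wfile.write(f"data: {json.dumps({'token': token})}\n\n".encode())
            self.wfile.flush()

    def log_message(self, *args) -> None:  # keep the demo quiet
        pass


def stream_tokens(url: str, prompt: str, max_tokens: int = 16) -> List[str]:
    """POST a prompt and collect tokens from the event stream line by line."""
    body = json.dumps({"prompt": prompt, "max_tokens": max_tokens}).encode()
    req = urllib.request.Request(url, data=body, headers={"Content-Type": "application/json"})
    tokens = []
    with urllib.request.urlopen(req) as resp:
        for raw in resp:
            line = raw.decode().strip()
            if line.startswith("data: "):
                tokens.append(json.loads(line[len("data: "):])["token"])
    return tokens


if __name__ == "__main__":
    server = ThreadingHTTPServer(("127.0.0.1", 0), StreamingHandler)  # port 0: pick a free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    url = f"http://127.0.0.1:{server.server_address[1]}/completions"
    print(stream_tokens(url, "hello from a local model"))  # ['hello', 'from', 'a', 'local', 'model']
    server.shutdown()
```

A real client would typically render each token as it arrives rather than collecting a list; collecting them here just keeps the sketch easy to check.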

Overall, the Local AI Playground provides a user-friendly and efficient environment for local AI experimentation, model management, and inferencing.